Choosing an AI IDE

Earlier this month, I had the opportunity to review several AI IDEs after being asked to recommend an AI assistant for a team. This post is a summary of my approach & findings. You probably will not learn anything earth-shattering, but I hope it helps you clarify your own thought process.

Criteria: Cost

I dismissed cost early on in the exercise. Most of the assistants are comparable.

Step 1: Compare AI Models

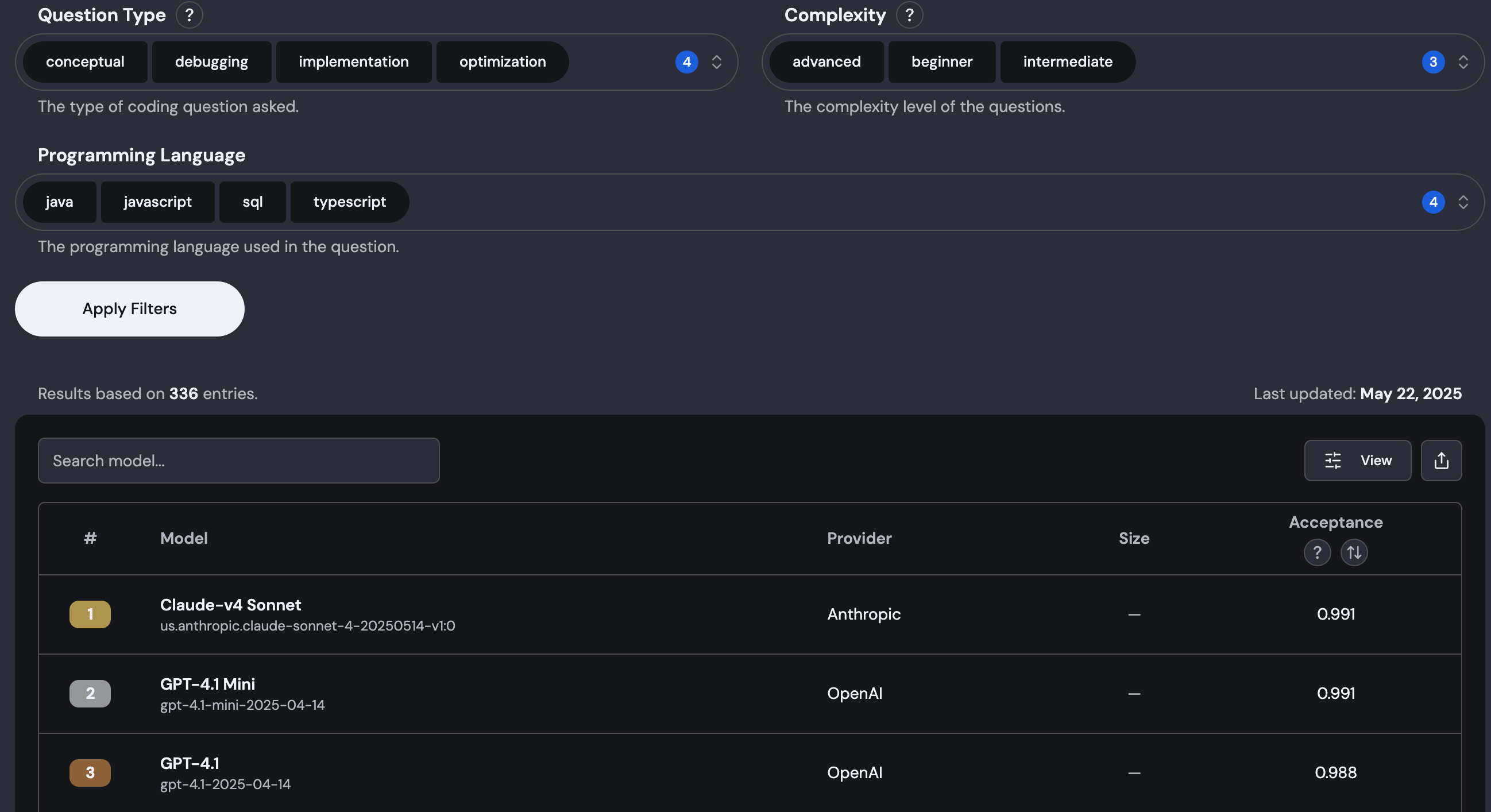

I started by comparing models across different criteria & languages using sites like https://www.prollm.ai/.

As you can see, for my chosen stack, Claude Sonnet was the winner. But the others were so close that the comparison was meaningless. For my needs, a difference in the second decimal place was as good as no difference at all.

Step 2: Ask AI

Then, I asked Google Gemini to make me a report. My prompt:

Come up with the criteria an AI IDE should have. Assign weights to each and then use them to compare the IDEs available today. Support for [my stack here] should get special mention.

Gemini produced a comprehensive report, and this was more useful. It rated Cursor & GitHub Copilot as the best & provided articles as references.

The problem: many of the articles it used as reference were paid ones: marketing material from the companies themselves.

I repeated the exercise, asking Gemini to ignore such sources. Cursor & Copilot still stayed on top.
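For readers who want to check this kind of weighted comparison by hand rather than trust a generated report, here is a minimal sketch in Python. The criteria names, weights and ratings are all hypothetical placeholders of mine, not the ones Gemini produced and not my test results; swap in your own.

```python
from typing import Dict, List

# Hypothetical criteria and weights (chosen to sum to 1.0); adjust to your team's priorities.
WEIGHTS: Dict[str, float] = {
    "code_quality": 0.4,
    "stack_support": 0.3,
    "ease_of_use": 0.2,
    "cost": 0.1,
}

# Placeholder ratings (0-10) for two made-up tools; these are NOT real measurements.
EXAMPLE: Dict[str, Dict[str, float]] = {
    "tool_a": {"code_quality": 8, "stack_support": 6, "ease_of_use": 9, "cost": 5},
    "tool_b": {"code_quality": 7, "stack_support": 9, "ease_of_use": 6, "cost": 8},
}


def weighted_score(ratings: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Return the weighted sum of the per-criterion ratings."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[name] * ratings[name] for name in weights)


def rank(candidates: Dict[str, Dict[str, float]]) -> List[str]:
    """Return candidate names ordered from highest to lowest weighted score."""
    return sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)


if __name__ == "__main__":
    for name in rank(EXAMPLE):
        print(f"{name}: {weighted_score(EXAMPLE[name]):.2f}")
```

Writing the weights down explicitly also makes it easy to see how sensitive the ranking is: if a small change in one weight flips the order, the tools are effectively tied.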

Step 3: Manually verify

At about this time, I was asked to include Amazon Q in the comparison.

I decided to do a comparison for myself. For my tests, I chose Windsurf, Copilot, Cursor, TabNine & Amazon Q.

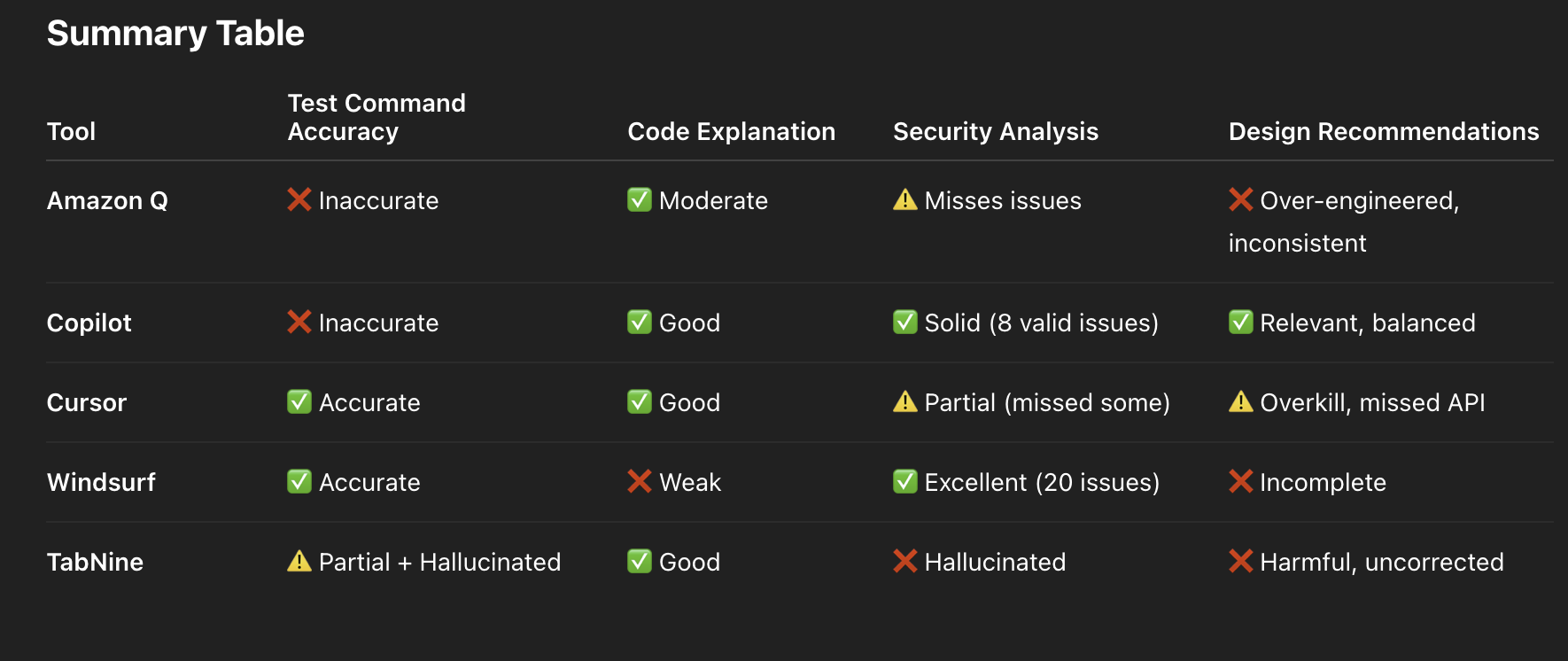

My testing involved the following tasks:

- Gave it a simple React component as context & asked it to run the unit test

- Gave it a JSP and asked it to explain the code to me & list all the APIs the JSP invoked

- The JSP had several security issues I was already aware of. I asked each AI assistant to review the code & list all security issues it could find

- Finally, I asked each AI assistant how it would redesign the use case: what APIs would it add, modify or delete?

The results have been summarised below. Copilot & Cursor were again the best of the lot. Next came Windsurf, then Amazon Q. I was quite disappointed with TabNine’s output and dropped it. Amazon Q felt clunky & difficult to use (it was not very customisable, it took over the IDE, and I had to remove it to be able to work as before). Windsurf did very well in one area but was also dropped since it didn’t feel as polished as Cursor.

At this point, I had two close contenders & needed to make a choice.

Step 4: Revelations

While this exercise was happening, I was also using AI for my own work & observing how members of my team used AI for their work.

This was when I had two revelations:

- Most developers I saw weren’t using the assistants well at all.

- How well an AI assistant performed at one task wasn’t enough. I also needed to ask: How easy was it to use as a beginner? How much friction did it cause? How easy was it to learn to use it well?

Revelation 1: Developers are the bottleneck

One example: Several developers were copying code snippets, pasting them into ChatGPT in the browser and asking it to solve the issue, with very terse & obscure instructions.

[error here] UserDAO is not finding session_id. What do I do?

(What should the user have done? This kind of question needs knowledge of the codebase, which an IDE-based assistant can provide better.)

To its credit, ChatGPT did a great job of assuming the context and provided somewhat valid suggestions.

Another example: Giving the AI an instruction that is too vague or too broad.

Run npm run eslint. Fix all the errors you find.

Why is this a problem?

AI assistants work best when they are given enough context & clear rules. Most times when such broad instructions were given, the AI struggled with reading the output of the command and prioritising what it should do. The user would invariably end up with more issues than they started with, or the AI would go into a loop, vomiting unnecessary code at the problems.
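One way to narrow such an instruction is to do the prioritising yourself and hand the AI one rule at a time. Below is a minimal Python sketch of that idea. It assumes you can get ESLint's JSON report (for example via the `--format json` option) rather than the plain `npm run eslint` output; the function names and the prompt wording are my own illustration, not a feature of any assistant.

```python
import json
from collections import defaultdict
from typing import Dict, List


def group_by_rule(eslint_json: str) -> Dict[str, List[str]]:
    """Map each ESLint rule ID to the 'file:line' locations that violate it."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for result in json.loads(eslint_json):
        for message in result.get("messages", []):
            # ruleId is null for fatal parse errors, so fall back to a label.
            rule = message.get("ruleId") or "unknown"
            grouped[rule].append(f"{result['filePath']}:{message.get('line', '?')}")
    return dict(grouped)


def scoped_prompts(grouped: Dict[str, List[str]]) -> List[str]:
    """Build one narrowly scoped prompt per rule, most frequent rule first."""
    ordered = sorted(grouped.items(), key=lambda item: len(item[1]), reverse=True)
    return [
        f"Fix only the ESLint rule '{rule}' at: {', '.join(locations)}. "
        "Do not change anything else."
        for rule, locations in ordered
    ]
```

Each resulting prompt names a single rule and the exact locations, which gives the assistant the context & clear rules it needs and keeps each conversation short.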

One part of the problem here is the developers themselves. They had not invested the time & effort necessary to experiment & learn how to use AI well. This could be solved by training developers in prompt engineering. Another part was related to the second revelation: how well designed was the assistant?

Revelation 2: AI Assistant Maturity

Is the AI assistant easy to use? Does it make the user better at using it over time?

This, finally, was the differentiator I was looking for, and it was the point where Copilot fell to second place in the rankings.

Cursor, Windsurf and others like them are iterating quickly. They introduced features like memories and rules, which have made us developers better at using AI for our work. Copilot is catching up but is still behind.

Here are three simple but critical examples:

Example 1: UX

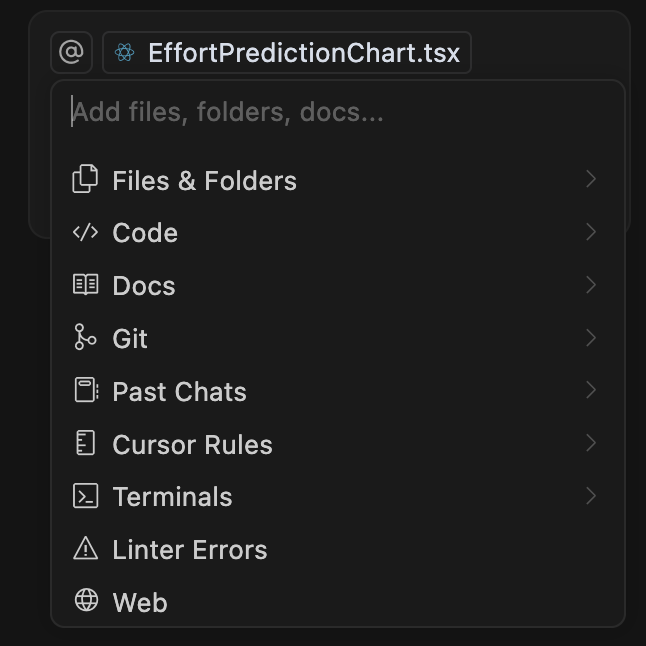

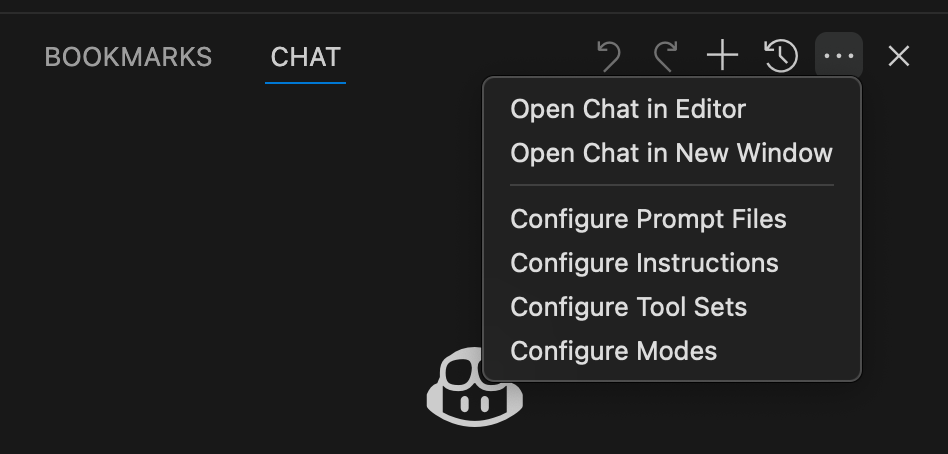

This is how & what Cursor allows you to add to a chat context.

Notice that you stay where you are & can add code, folders, rules, terminals and a lot more.

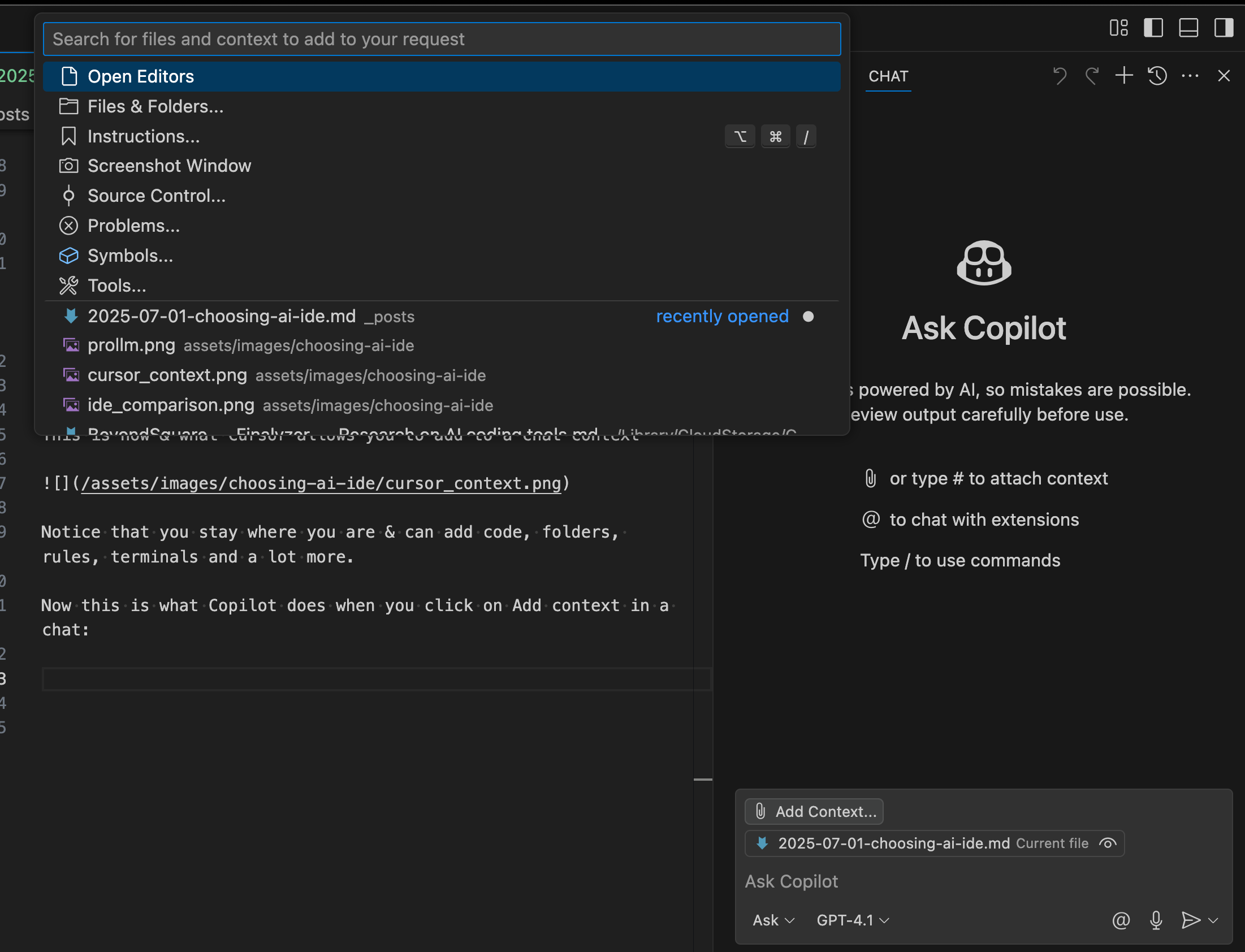

Now this is what Copilot does when you click on Add context in a chat:

Click on the button and you jump from the context window to somewhere else, where you can only add code. And sometimes, even after you’ve added a context & are conversing with the AI, it randomly forgets the context in favour of the file you currently have open. To add instructions (Copilot’s equivalent of rules), you navigate to a completely different location.

Example 2: Autorun

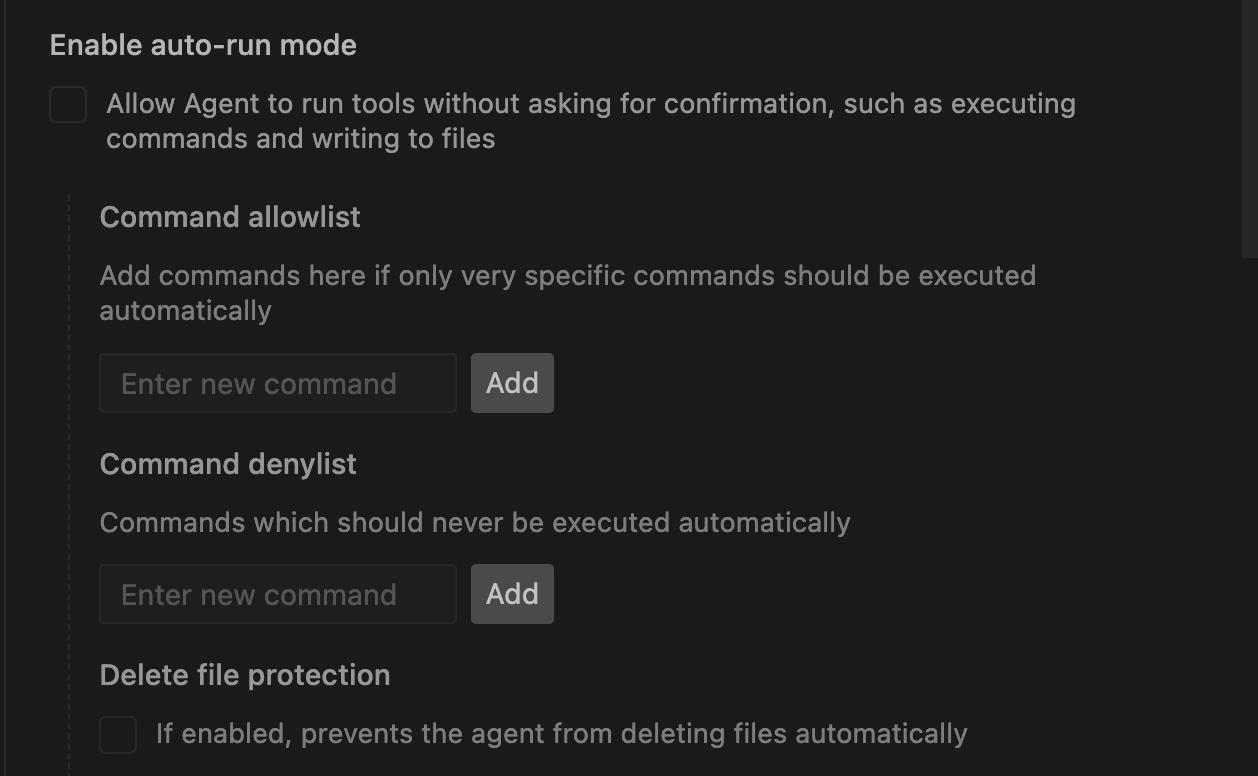

These days I normally use an AI assistant to help me improve the overall quality of a codebase. I experiment & iteratively improve a prompt to the point where the AI is doing exactly what I want it to do. I then ask it to go over the codebase & apply similar changes to all the targets it finds. Then I go have a coffee. When I come back, the whole codebase is cleaned up & any workflow I’ve defined to run afterwards (e.g. lint, tests) has been run.

Dedicated IDEs like Windsurf & Cursor do this beautifully.

For instance, in Cursor, the user is able to set up & control every aspect of “auto-run”. This feature has been present for months!

In contrast, there has been no such option yet in Copilot. It is irritating to keep clicking “Continue” every few minutes. (Coincidentally, Copilot may have got this feature today, 30th June 2025: GitHub issue.)

Example 3: History

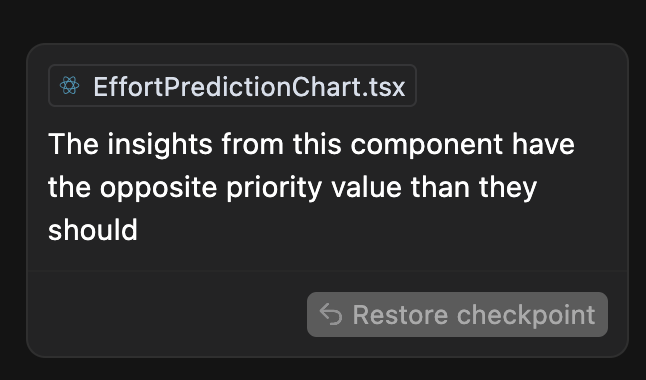

Sometimes I forget one of my own rules, which is to keep AI conversations short, & the AI ends up doing something I need to revert. Cursor allows me to go back in history quite easily.

Copilot does not support this feature as of today (GitHub issue).

There are a few more such examples, and I’m sure Copilot will catch up. But as of today, dedicated IDEs like Windsurf & Cursor do a much better job than Copilot, which as a plugin probably can’t affect the IDE as much.

Conclusion

So, which AI assistant should you buy an annual subscription for?

Answer: None of them. Instead, buy monthly subscriptions & experiment with any popular AI-based assistant. All of them are iterating & improving. At this point, tying yourself to any one is a bad idea.

My recommendations

For now, I’ve chosen these tools for my current workflow:

- Replit for quick prototyping

- Cursor for productionising